
In the first of a three-part blog series on some of the most pressing ethical considerations of using AI in education, explore these challenges and the role Plato is playing in helping institutions address them.

Nikita Dumitriuc

20 Nov 2024

The role of artificial intelligence (AI) in education is growing rapidly, especially within university settings, where it has the potential to revolutionise how students learn, interact with faculty, and engage with teaching materials. From learning platforms and AI-powered tutoring systems to automated grading and administrative support, AI has the potential to become an integral tool in modern education.

However, as AI’s influence expands, it raises significant ethical concerns that need careful consideration. Three of the most critical ethical issues of AI in education are academic integrity, bias, and the preservation of human interaction in learning.

This is the first part of our three-part blog series on AI ethics in education. In this series, we’ll explore the critical ethical considerations surrounding AI in education, including academic integrity, bias, and the preservation of human connection. Today, we focus on a crucial topic: bias in AI systems. We’ll examine how AI bias arises, its implications for education, and the steps solutions like Plato take to ensure fairness and inclusivity.

Biases

There are two main types of AI bias: algorithmic bias and data bias. Algorithmic bias arises from the design and implementation of the AI algorithms themselves, including the choice of features, the structure of the model, and the optimisation criteria. Data bias occurs when an AI system produces systematically prejudiced outcomes because the data it was trained on is skewed or unrepresentative. In the context of education, data bias can emerge when AI is trained on data that reflects existing societal biases, which the system then replicates and magnifies.

One of the most pressing concerns with bias in education is the potential for AI-driven grading systems to disproportionately affect certain demographic groups. Biased grading algorithms may unintentionally penalise students. If an AI grading system is trained on past exams that predominantly reflect the language, academic experiences, or cultural context of one group of students, it might not properly assess the work of students from different backgrounds. This can lead to unfair grading and potentially widen achievement gaps.
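One simple way to look for this kind of grading disparity is to compare AI-assigned grades with human-assigned grades for the same work, broken down by student group. The sketch below is only an illustration: the function name, the group labels, and the sample records are all hypothetical, and a real audit would need far more data and proper statistical testing.

```python
from collections import defaultdict
from statistics import mean


def mean_grade_gap_by_group(records):
    """Return the mean of (AI grade - human grade) for each group.

    `records` is an iterable of (group, human_grade, ai_grade) tuples.
    A clearly negative value for one group suggests the AI grader is
    marking that group more harshly than human markers did.
    """
    gaps = defaultdict(list)
    for group, human_grade, ai_grade in records:
        gaps[group].append(ai_grade - human_grade)
    return {group: mean(values) for group, values in gaps.items()}


# Made-up illustrative records: (group, human grade, AI grade).
sample_records = [
    ("group_a", 70, 71),
    ("group_a", 60, 59),
    ("group_b", 70, 64),
    ("group_b", 60, 56),
]

grade_gaps = mean_grade_gap_by_group(sample_records)
for group, gap in grade_gaps.items():
    print(f"{group}: mean AI-minus-human gap = {gap}")
```

In this toy data the AI grader agrees with human markers on average for one group but marks the other several points lower, which is the kind of pattern that would warrant a closer look.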

To prevent AI from reinforcing existing biases, it is critical that AI systems are designed with diverse and representative datasets. This means ensuring that the data used to train AI algorithms includes a wide range of student demographics. By incorporating diverse data, AI systems can avoid perpetuating biases and ensure that the learning experiences they offer are fair and inclusive. For example, an AI tool used in a classroom setting should be trained on data that represents a variety of academic backgrounds, learning disabilities, languages, and cultural perspectives, ensuring that all students can benefit equally.
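As a rough illustration of what checking for representativeness can look like, the sketch below compares each group's share of a training set with its share of a reference population and flags groups that fall short. The function name, the group labels, the reference shares, and the 5-percentage-point tolerance are all assumptions made for the example, not an established standard.

```python
from collections import Counter


def underrepresented_groups(training_labels, reference_shares, tolerance=0.05):
    """Flag groups whose share of the training data falls short of the
    reference share by more than `tolerance`.

    Returns a dict mapping group -> (observed_share, expected_share).
    """
    total = len(training_labels)
    if total == 0:
        raise ValueError("training_labels must not be empty")
    counts = Counter(training_labels)
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            flagged[group] = (observed, expected)
    return flagged


# Hypothetical data: one label per training example.
labels = ["native_speaker"] * 90 + ["non_native_speaker"] * 10
# Hypothetical shares of each group in the student population.
reference = {"native_speaker": 0.7, "non_native_speaker": 0.3}

flagged = underrepresented_groups(labels, reference)
for group, (observed, expected) in flagged.items():
    print(f"{group}: {observed:.0%} of training data vs {expected:.0%} expected")
```

A check like this only covers the groups you think to measure, so it complements rather than replaces a broader review of where the training data came from.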

The Digital Education Council (DEC), a global alliance dedicated to advancing innovation in education, brings together leading institutions such as the University of Nottingham, Bocconi University, and King’s College London. In its recently published 30 Global Challenges report, the DEC identifies the lack of ethical AI frameworks as the most significant issue facing higher education today, a point also highlighted in one of our previous blogs as critical to enabling AI adoption in HE. This challenge underscores the urgent need for clear guidelines to govern the ethical development and implementation of AI technologies in academic environments. Notably, "Bias in data and AI models" ranks as the sixth most critical challenge, highlighting concerns about fairness, inclusivity, and the potential for AI to inadvertently perpetuate existing inequalities. These findings emphasise the importance of addressing ethical considerations to ensure that AI serves as a force for equity and innovation in education.

At Plato, we are committed to ensuring that our AI operates with fairness, transparency, and accuracy. To minimise bias and enhance the effectiveness of our AI, we have taken a proactive approach by combining the latest, most advanced AI models with the specific, structured learning resources already available within a university's Virtual Learning Environment (VLE). This includes course materials such as lectures, seminar notes, lecture transcripts, and additional study resources.

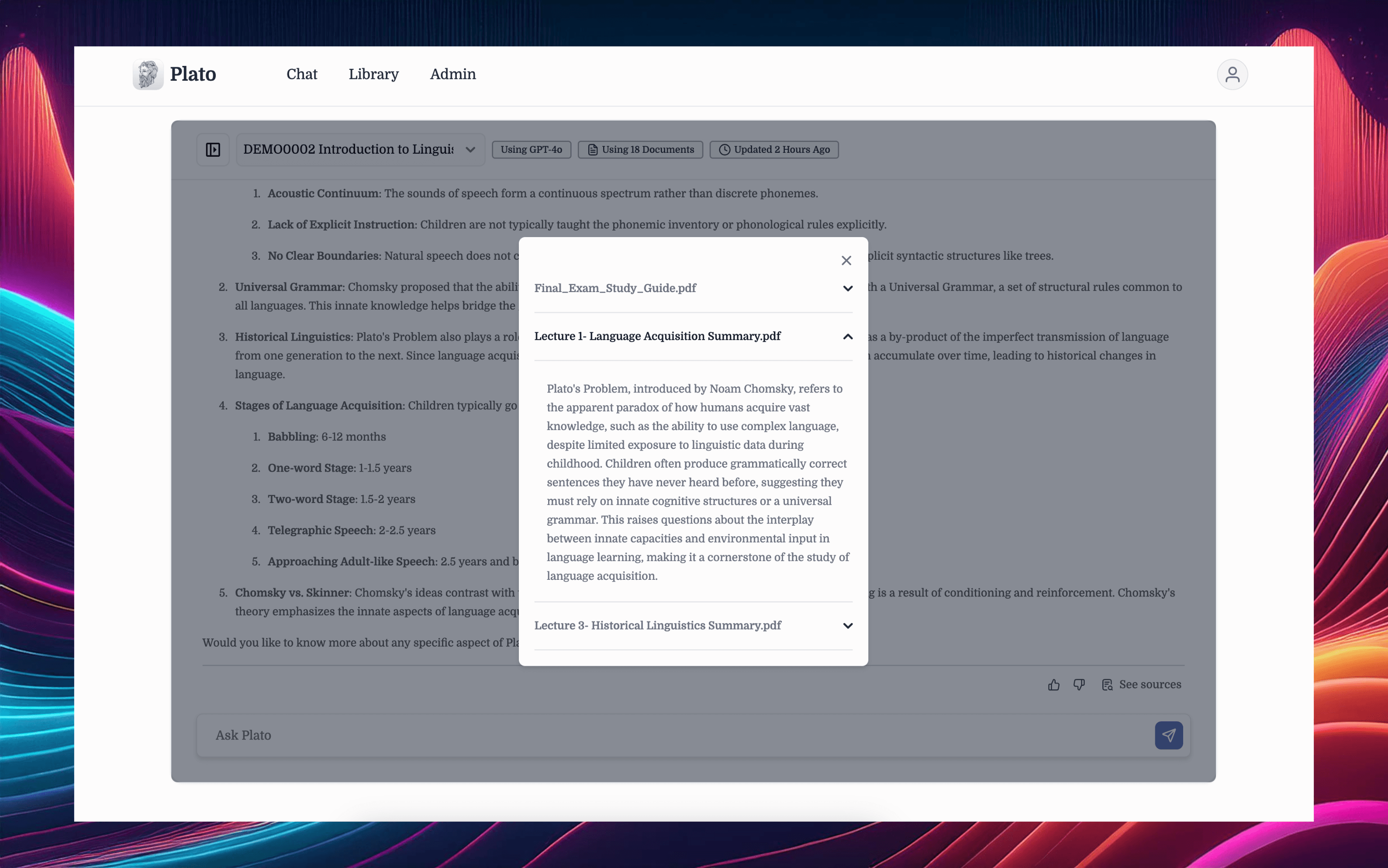

When students interact with Plato, the AI first analyses the relevant educational content directly from the VLE, whether it’s Canvas, Blackboard, or Moodle. This ensures that the AI is grounded in the most accurate and contextually relevant information available to the student. Only after analysing this module-specific content does Plato seek supplementary answers from a broader language model, if necessary.

Crucially, Plato provides students with clear sources where the information comes from, allowing them to cross-reference the AI’s response with the original sources in the VLE. This gives students the ability to verify information on their own time and deepen their understanding of the material.

This dual-layer approach helps to ensure that Plato’s responses are not only accurate but also rooted in the specific context of the course materials, reducing the risk of bias introduced by generalised data. Moreover, we make it clear when a response is drawn solely from module resources and when it includes broader information. This transparency helps students trust that the AI is providing them with the most relevant and reliable support, without inadvertently introducing external biases.
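To make the idea concrete, here is a minimal sketch of a module-first lookup with a labelled fallback. It is not Plato's implementation: the `Answer` class, the function names, the naive keyword matching, and the stand-in `general_model` callable are all hypothetical and chosen only to illustrate the pattern of checking course materials first and stating where each answer came from.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    origin: str  # "module_only" or "includes_general"
    sources: list = field(default_factory=list)


def _keywords(text):
    """Lower-case words longer than three characters, punctuation stripped."""
    return {w.lower().strip("?.,") for w in text.split() if len(w) > 3}


def find_module_passages(question, module_docs):
    """Naive keyword match over module materials (title -> text)."""
    wanted = _keywords(question)
    return [(title, text) for title, text in module_docs.items()
            if wanted & _keywords(text)]


def answer_question(question, module_docs, general_model):
    """Check module materials first; fall back to a broader model if needed."""
    hits = find_module_passages(question, module_docs)
    if hits:
        return Answer(
            text=" ".join(text for _, text in hits),
            origin="module_only",
            sources=[title for title, _ in hits],
        )
    # Nothing relevant in the module: use the broader model and say so.
    return Answer(text=general_model(question), origin="includes_general")


# Hypothetical module content and a stand-in for a broader language model.
docs = {"Lecture 3": "Photosynthesis converts light into chemical energy."}
fallback = lambda q: "General answer (not from module materials)."

from_module = answer_question("What is photosynthesis?", docs, fallback)
from_general = answer_question("Explain quantum tunnelling", docs, fallback)
print(from_module.origin, from_module.sources)
print(from_general.origin, from_general.sources)
```

Carrying the `origin` and `sources` fields through to the student is what allows them to see whether an answer came from their own course materials and to check it against the original.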

Wrap-up

The integration of AI into education presents both immense opportunities and complex ethical challenges. While AI has the potential to change learning through support, efficient resource management, and enhanced accessibility, it also raises critical questions about academic integrity, bias, and the preservation of human connections. Addressing these issues is essential to ensure AI’s positive impact on students and educators alike.

We believe Plato exemplifies how AI can be deployed ethically and effectively. By prioritising fairness and transparency, Plato ensures that its tools support academic integrity, mitigate biases, and complement human educators. Features such as context-aware answers, clear citations, and customisation reflect Plato’s commitment to ethical practices and student empowerment.

Looking ahead, AI’s role in education will undoubtedly expand, offering new ways to enhance learning experiences. However, this progress must be guided by responsible and ethical practices to ensure that AI serves as a force for inclusion, equity, and academic excellence. Together, educators and solution providers can shape a future where AI enriches education without compromising its core values.

If you're interested in seeing how AI can transform learning, book a demo of Plato today. Experience firsthand how Plato empowers students, supports educators, and ensures academic integrity through responsible AI design.